About this Project’s Structure

This project’s structure and software stack reflect my current (as of July 2023) approach to producing analytics that is transparent and reproducible. It incorporates years of experience as a model validator with common obstacles for stakeholders seeking to understand and use an analysis, and years of learning about how to apply software engineering best practices to minimize and remove them.

If you want to get your hands on the data and extend or reproduce my analysis, the instructions in Getting Started should get you there. If you’re curious to better understand how the project works and why I’ve made the choices I have, read through Motivations and Guiding Ideas below. The User Guide will take you deeper on how to use dvc, poetry, and sphinx effectively in this project or your own projects.

Motivations and Guiding Ideas

Reproducible history of all your analyses and results

It’s no secret that Data Science is an exploratory process, and that failed experiments can be as informative as successful ones. But in the real world, with deadlines, endless meetings, and constantly shifting requirements, only the most (ahem) detail-oriented analysts have the fortitude to keep an explicit log of what they tried, let alone ensure those results are reproducible.

Analytical results are produced by applying code to data. To reproduce them, you need to apply the same code to the same data. Using source control tools like git to track the detailed revision history of code has become standard practice on most Data Science teams, but because these tools are not designed to track large datasets, it is rare to see systematic data versioning. This means that Data Scientists are typically tracking the alignment of code and data mentally during a project. If you’re not the author (or a few months have passed) it can be pretty tough to piece together what happened.

While they are not as widely adopted as code versioning tools, data versioning tools delegate this task to the computer and free the analyst from having to mentally track code/data alignment. This template uses DVC for data versioning because it requires no specialized infrastructure and integrates with all common data storage systems.

End-to-end reproducible pipelines

Training and evaluating a model is a complex workflow with many interdependent steps that must be run in the correct order. Typical, notebook-centric analyses leave this task dependency structure implicit, but there are major benefits to explicitly encoding them as a “Directed Acyclic Graph”, or DAG. Two key benefits are [1]

Consistency and Reproducibility: once the workflow’s structure has been encoded as a DAG, a workflow scheduler ensures that every step is executed in the correct order every time (unlike a tired Data Scientist working late to finish an analysis).

Clarity: DAGs can be visualized automatically, and these visualizations are intuitive even for non-technical audiences.

There are many DAG orchestration tools in widespread use by Data Scientists [2], but most require remotely hosted infrastructure and/or a significant learning curve to apply to an analysis project. In addition to its data versioning capabilities, DVC enables Data Scientists to build DAG workflows and transparently track their data versions without requiring any remotely hosted task orchestration infrastructure.

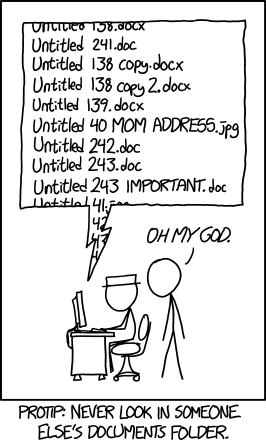

Every model build I’ve ever worked on has ended up looking something like this.
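The ordering guarantee that a workflow scheduler provides can be sketched in a few lines of Python. This is only an illustration of the idea, not how DVC is implemented: the stage names are hypothetical, and the standard-library graphlib module (Python 3.9+) stands in for the scheduler.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical stages: each key maps to the set of stages it depends on.
pipeline = {
    "pull_data": set(),
    "build_features": {"pull_data"},
    "train_model": {"build_features"},
    "evaluate_model": {"train_model", "build_features"},
    "make_report": {"evaluate_model"},
}


def execution_order(dag):
    """Return one valid run order in which every stage follows its dependencies."""
    return list(TopologicalSorter(dag).static_order())


order = execution_order(pipeline)
print(order)
```

If the dependencies ever formed a cycle, TopologicalSorter would raise graphlib.CycleError instead of returning an order, which is the "acyclic" part of the DAG being enforced.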

Optimize for faster iterations

If you’re still exploring what analysis to perform or how to execute it, manual and interactive analysis tools are powerful, allowing analysts to experiment with different approaches to find what works.

But over the lifecycle of a modeling project, there’s often a “phase transition” from experimenting with the scope and sequence of the analysis to repeating a well-defined sequence of steps over and over again with small variations as you make updates, fix bugs, and attempt to satisfy the curiosities of your stakeholders. Typically, at this stage the analysis has also become more complex and involves multiple interconnected steps. Manual execution becomes increasingly time-consuming, error-prone, and inefficient in handling repetitive tasks and managing variations.

If your analysis is not currently automated end-to-end, the quickest path to the next deliverable will always be to crank it out manually. But if you make the investment to automate your analysis from initial data pull to final results, you can test more hypotheses and learn faster over the lifespan of the project.

Starting from scratch, the necessary investment is prohibitive for many Data Science teams. But this template makes end-to-end automation with DVC much easier.

Notebooks are for exploration and communication

Notebook packages like Jupyter Notebook and other literate programming tools are very effective for exploratory data analysis. However, they are less helpful for reproducing an analysis, unless the author takes special care to ensure they run linearly end-to-end (effort that might be better spent refactoring the notebook into a stand-alone script that can be imported for code re-use or scheduled in a DAG). Notebooks are also challenging objects for source control (e.g. diffs of the underlying JSON format are often not human-readable, and require specialized plugins to view in your source control platform that your IT department may not be willing to install or support).

The guidelines for use of notebooks in this project structure are:

Follow a naming convention that shows the owner and any sequencing required to run the notebooks correctly, for example <step>-<ghuser>-<description>.ipynb (e.g., 0.3-ahasha-visualize-distributions.ipynb).

Refactor code and analysis steps that will be repeated frequently into Python scripts within the your_proj package. You can import your code and use it in notebooks with a cell like the following:

# OPTIONAL: Load the "autoreload" extension so that imported code is reloaded after a change.
%load_ext autoreload
%autoreload 2
from your_proj.data import make_dataset
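The naming convention above can be checked mechanically. The sketch below is one possible reading of <step>-<ghuser>-<description>.ipynb, not a rule enforced by this template: it assumes a dotted numeric step, a user name without hyphens, and a lowercase hyphen-delimited description. The function names are hypothetical.

```python
import re

# Assumed pattern: dotted numeric step, user name without hyphens,
# and a lowercase, hyphen-delimited description.
NOTEBOOK_NAME = re.compile(
    r"^(?P<step>\d+(?:\.\d+)*)"
    r"-(?P<ghuser>[A-Za-z0-9_]+)"
    r"-(?P<description>[a-z0-9]+(?:-[a-z0-9]+)*)"
    r"\.ipynb$"
)


def parse_notebook_name(filename):
    """Return the parts of the name as a dict, or None if it breaks the convention."""
    match = NOTEBOOK_NAME.match(filename)
    return match.groupdict() if match else None


def step_key(filename):
    """Sort key that orders notebooks by their numeric step (0.3 before 0.10)."""
    parts = parse_notebook_name(filename)
    return tuple(int(number) for number in parts["step"].split("."))


print(parse_notebook_name("0.3-ahasha-visualize-distributions.ipynb"))
print(parse_notebook_name("Untitled.ipynb"))
```

Sorting with step_key rather than alphabetically keeps a notebook numbered 0.10 after 0.3, which a plain string sort would get wrong.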

Reproducible environment installation with Poetry

The first step in reproducing an analysis is always reproducing the computational environment it was run in. You need the same tools, the same libraries, and the same versions to make everything play nicely together. Python is notorious for making this difficult, even though there are a bunch of tools that are supposed to do this: pip, virtualenv, conda, poetry, etc.

A key consideration from a reproducibility standpoint when choosing among these tools is the subtle differences in their goals. pip and conda are designed to be flexible: they want you to be able to install a given package with as wide a range of versions of other packages in your environment as possible, so you can construct environments with as many packages as you need.

This is great news for productivity, but bad news for reproducibility, because by default they’ll install the latest version of any dependency that’s compatible with the other declared dependencies. pip and conda have the capability to “peg” requirements to specific versions, but that isn’t the default behavior and it requires quite a bit of manual work to use the tools that way, so people generally don’t do it.

For example, suppose you want to use pandas, and you create the following requirements.txt file and install it with pip install -r requirements.txt:

pandas==1.4.2

When you first run the pip install command, you might get version 1.20.0 of numpy installed to satisfy pandas requirements. However, when you run the pip install command again half a year later, you might find that the version of numpy installed has changed to 1.22.3, even though you are using the same requirements.txt file.

Worse, package developers are often sloppy about testing all possible combinations of dependency versions, so it’s not uncommon that packages that are “compatible” in terms of their declared requirements in fact don’t work together. An environment managed with pip or conda that worked a few months ago no longer works when you do a clean install today.
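The kind of silent drift described above is easy to detect once installed versions are recorded somewhere. The sketch below compares two such records; find_drift is a hypothetical helper, and the version numbers are the ones from the pandas/numpy example above.

```python
def find_drift(recorded, installed):
    """Return {package: (recorded_version, installed_version)} for every mismatch."""
    drift = {}
    for package in sorted(set(recorded) | set(installed)):
        before = recorded.get(package)
        after = installed.get(package)
        if before != after:
            drift[package] = (before, after)
    return drift


# Versions from the example above: same requirements.txt, two install dates.
first_install = {"pandas": "1.4.2", "numpy": "1.20.0"}
later_install = {"pandas": "1.4.2", "numpy": "1.22.3"}

print(find_drift(first_install, later_install))
# {'numpy': ('1.20.0', '1.22.3')}
```

In a real environment the installed side of the comparison could be filled in with importlib.metadata.version from the standard library. A lock file makes this check unnecessary by recording every resolved version up front.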

This template uses poetry to manage the dependency environment. poetry is a great choice from a reproducibility perspective because it assumes you want to peg all dependency versions by default and its priority is to generate the same environment predictably every time.[3]

Consistency and familiarity

A well-defined, standard project structure lets a newcomer start to understand an analysis without having to spend a lot of time getting oriented. They don’t have to read 100% of the code before knowing where to look for very specific things. Well-organized code is self-documenting in that the organization itself indicates the function of specific components, without requiring additional explanation.

In an organization with well-established standards:

Managers have more flexibility to move team members between projects and domains, because less “tribal knowledge” is required

Colleagues can quickly focus on understanding the substance of your analysis, without getting hung up on the mechanics

Conventional problems are solved in conventional ways, leaving more energy to focus on the unique challenges of each project

New best practices and design patterns can quickly be projected to the team by updating the template.

A good example of this can be found in any of the major web development frameworks like Django or Ruby on Rails. Nobody sits around before creating a new Rails project to figure out where they want to put their views; they just run rails new to get a standard project skeleton like everybody else. Because that default project structure is logical and reasonably standard across most projects, it is much easier for somebody who has never seen a particular project to figure out where they would find the various moving parts. Ideally, that’s how it should be when a colleague opens up your data science project.

That said,

“A foolish consistency is the hobgoblin of little minds” — Ralph Waldo Emerson (and PEP 8!)

Or, as PEP 8 put it:

Consistency within a project is more important. Consistency within one module or function is the most important. … However, know when to be inconsistent – sometimes style guide recommendations just aren’t applicable. When in doubt, use your best judgment. Look at other examples and decide what looks best.

First-time Setup

If the tools used by this project template are not already part of your development workflow, you will have to go through a few installation and configuration steps before you run a project following this project structure. You should not need to repeat these steps again to work on another project created with this cookiecutter.

Python, Pyenv, and Poetry

Poetry is easy to use once set up, but it can be a little confusing to transition from other Python environment management tools, or to use it in the same environment as other tools. This section will guide you through the one-time setup to install a clean Python environment using pyenv and install Poetry.

Using pyenv isn’t necessary to use Poetry, but it’s a convenient way to manage multiple isolated Python versions in your development environment without a lot of overhead.

Run the following in the terminal to install pyenv:

$ curl https://pyenv.run | bash

Then add the following to your shell login script (~/.zshrc by default for Macs):

export PYENV_ROOT="$HOME/.pyenv"
command -v pyenv >/dev/null || export PATH="$PYENV_ROOT/bin:$PATH"
eval "$(pyenv init -)"
eval "$(pyenv virtualenv-init -)"

If you’ve been using conda, and it’s configured to automatically activate your base conda environment on login, you’ll likely want to turn this off by running

$ conda config --set auto_activate_base false

Then log back into your shell and install poetry and the desired versions of python using

$ pyenv install <desired python version number>

$ pyenv global <desired default python version number>

$ curl -sSL https://install.python-poetry.org | python3 -

User Guide

Project Organization

├── Makefile <- Makefile with project operations like `make test` or `make docs`

├── README.md <- The top-level README for developers using this project.

├── data

│ ├── raw <- The initial raw data extract from the data warehouse.

│ ├── interim <- Intermediate data that has been transformed but is not used directly for modeling or evaluation.

│ ├── processed <- The final, canonical data sets for modeling and evaluation.

│ └── external <- Data from third party sources.

│

├── docs <- Documentation templates guiding you through documentation expectations; Structured

│ │ as a sphinx project to automate generation of formatted documents; see sphinx-doc.org for details

│ ├── figures <- Generated graphics and figures to be used in reporting

│ └── model_documentation <- Markdown templates for model documentation and model risk management.

│ └── _build <- Generated documentation as HTML, PDF, LaTeX, etc. Do not edit this directory manually.

│

├── models <- Trained and serialized models, model predictions, or model summaries

│

├── notebooks <- Jupyter notebooks. Naming convention is a number (for ordering),

│ the creator's initials, and a short `-` delimited description, e.g.

│ `1.0-jqp-initial-data-exploration.ipynb`.

├── references <- Data dictionaries, manuals, important papers, etc

│

├── pyproject.toml <- Project configuration file; see [`setuptools documentation`](https://setuptools.pypa.io/en/latest/userguide/pyproject_config.html)

│

├── poetry.lock <- The requirements file for reproducing the analysis environment, e.g.

│ generated with `poetry install`; see https://python-poetry.org/docs/

│

├── .pre-commit-config.yaml <- Default configuration for pre-commit hooks enforcing style and formatting standards

│ and use of a linter (`isort`, `brunette`, and `flake8`)

│

├── setup.cfg <- Project packaging and style configuration

│

├── setup.py <- Project installation script; makes project pip installable (pip install -e .) so

│ project module can be imported

│

├── .dvc <- Data versioning cache and configuration using dvc; see https://dvc.org

│ └── config <- YAML formatted configuration file for dvc project; defines default remote data storage cache location;

│

├── tests <- Automated test scripts;

│ └── data <- tests for data download and/or generation scripts

│ │ └── test_make_dataset.py

│ │

│ └── features <- tests for feature generation scripts

│ │ └── test_build_features.py

│ │

│ ├── models <- tests for model training and prediction scripts

│ │ │

│ │ ├── test_predict_model.py

│ │ └── test_train_model.py

│ │

│ └── visualization <- tests for visualization scripts

│ └── test_visualize.py

│

├── milton_maps <- Source code for use in this project.

│ │

│ ├── __init__.py <- Makes milton_maps a Python module

│ │

│ ├── data <- Scripts to download or generate data

│ │ ├── make_dataset.py <- Utility CLI script to extract data from database tables to local parquet files.

│ │

│ ├── features <- Scripts to turn raw data into features for modeling

│ │ └── build_features.py

│ │

│ ├── models <- Scripts to train models and then use trained models to make

│ │ │ predictions (write outputs to `PROJECT_ROOT/models`)

│ │ ├── predict_model.py

│ │ └── train_model.py

│ │

│ └── visualization <- Scripts to create exploratory and results oriented visualizations;

│ └── visualize.py (write outputs to `PROJECT_ROOT/reports/figures`)

DVC How To…

Of the tools incorporated in this project template, DVC is likely the least familiar to the typical Data Scientist and has more of a learning curve to use effectively. The project has good documentation which is well worth reviewing in greater detail.

Below are the DVC commands you will use most frequently during model development, discussed in the order that they’re typically used in a standard workflow. Consult the DVC command reference for a full listing of commands and greater detail.

Check whether code and data are aligned: dvc status

dvc status shows changes in the project pipelines, as well as file mismatches either between the cache and workspace, or between the cache and remote storage. Use it to diagnose whether data and code are aligned and see which stages of the pipeline will run if dvc repro is called.

Align code and (previously-calculated) data: dvc checkout

dvc checkout updates DVC-tracked files and directories in the workspace based on current dvc.lock and .dvc files. This command is usually needed after git checkout, git clone, or any other operation that changes the current dvc.lock or .dvc files in the project (though the installed git hooks frequently automate this step). It restores the versions of all DVC-tracked data files and directories referenced in DVC metadata files from the cache to the workspace.

Define the pipeline DAG

You define the Directed Acyclic Graph (DAG) of your pipeline using the dvc.yaml. The individual scripts or tasks in the pipeline are called “stages”.

dvc.yaml defines a list of stages, the commands required to run them, their input data and parameter dependencies, and their output artifacts, metrics, and plots.

dvc.yaml uses the YAML 1.2 format and a human-friendly schema explained in detail here. DVC provides CLI commands to edit the dvc.yaml file, but it is generally easiest to edit it manually.

dvc.yaml files are designed to be small enough so you can easily version them with Git along with other DVC files and your project’s code.

Add a stage to the model pipeline

Let’s look at a sample stage. It depends on a script file it runs as well as on a raw data input:

stages:
  prepare:
    cmd: source src/cleanup.sh
    deps:
      - src/cleanup.sh
      - data/raw.csv
    outs:
      - data/clean.csv

A new stage can be added to the model pipeline DAG in one of two ways:

Directly edit the pipeline in dvc.yaml files (recommended).

Use the CLI command dvc stage add, a limited command-line interface to set up pipelines. For example:

$ dvc stage add --name train \

--deps src/model.py \

--deps data/clean.csv \

--outs data/predict.dat \

python src/model.py data/clean.csv

would add the following to dvc.yaml

stages:
  prepare:
    ...
  train:
    cmd: python src/model.py data/clean.csv
    deps:
      - src/model.py
      - data/clean.csv
    outs:
      - data/predict.dat

Tip

One advantage of using dvc stage add is that it will verify the validity of the arguments provided (otherwise stage definition won’t be checked until execution). A disadvantage is that some advanced features such as templating are not available this way.
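Note that neither stage names its upstream stage explicitly: the DAG follows from one stage listing another stage's output among its dependencies. The sketch below illustrates that inference on the prepare and train stages from the example above, written as plain Python dictionaries. It is a simplified illustration rather than DVC's implementation, and upstream_stages is a hypothetical helper.

```python
# The prepare and train stages from the dvc.yaml example, as plain dictionaries.
stages = {
    "prepare": {
        "deps": ["src/cleanup.sh", "data/raw.csv"],
        "outs": ["data/clean.csv"],
    },
    "train": {
        "deps": ["src/model.py", "data/clean.csv"],
        "outs": ["data/predict.dat"],
    },
}


def upstream_stages(stages):
    """Map each stage to the stages whose outputs it lists as dependencies."""
    producer = {out: name for name, spec in stages.items() for out in spec["outs"]}
    return {
        name: sorted({producer[dep] for dep in spec["deps"] if dep in producer})
        for name, spec in stages.items()
    }


print(upstream_stages(stages))
# {'prepare': [], 'train': ['prepare']}
```

Because train depends on data/clean.csv, which prepare produces, train must run after prepare. Dependencies that no stage produces, such as data/raw.csv, are simply inputs to the pipeline.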

Calculate (or reproduce) pipeline outputs: dvc repro

dvc repro reproduces complete or partial pipelines by running their stage commands as needed in the correct order. This is similar to make in software build automation, in that DVC captures “build requirements” (stage dependencies) and determines which stages need to run based on whether their outputs are “up to date”. Unlike make, it caches the pipeline’s outputs along the way.
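The "up to date" decision can be pictured as comparing content hashes of a stage's dependencies against the hashes recorded after its last run. The sketch below is a deliberately simplified illustration with in-memory bytes standing in for files. It is not DVC's actual logic, and digest and stage_is_stale are hypothetical names.

```python
import hashlib


def digest(content: bytes) -> str:
    """Content hash used to detect whether a dependency has changed."""
    return hashlib.md5(content).hexdigest()


def stage_is_stale(current_deps, recorded_hashes):
    """True if any dependency was added, removed, or changed since the last run."""
    current_hashes = {path: digest(data) for path, data in current_deps.items()}
    return current_hashes != recorded_hashes


# In-memory stand-ins for the dependency files of a stage.
deps = {"src/cleanup.sh": b"sort data/raw.csv", "data/raw.csv": b"a,b\n1,2\n"}
recorded = {path: digest(data) for path, data in deps.items()}  # state after a run

print(stage_is_stale(deps, recorded))  # False: nothing changed, the stage can be skipped
deps["data/raw.csv"] = b"a,b\n1,3\n"   # simulate an updated data extract
print(stage_is_stale(deps, recorded))  # True: the stage must be rerun
```

Hashing content rather than checking timestamps, as make does, means that simply touching a file or switching branches back and forth does not trigger unnecessary reruns.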

Define pipeline parameters

Parameters are any values used inside your code to tune analytical results. For example, a random forest classifier may require a maximum depth value. Machine learning experimentation often involves defining and searching hyperparameter spaces to improve the resulting model metrics.

Your source code should read params from structured parameters files (params.yaml by default). You can use the params field of dvc.yaml to tell DVC which parameters each stage depends on. When a param value has changed, dvc repro and dvc exp run invalidate any stages that depend on it and reproduce them.
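To make the invalidation rule concrete, the sketch below works out which stages are affected when a parameter value changes. The stage names, parameter keys, and values are hypothetical, and nested dictionaries stand in for the contents of params.yaml. It only covers direct parameter dependencies; in a real pipeline, stages downstream of a rerun stage may also need to run.

```python
def get_param(params, dotted_key):
    """Look up a nested value such as 'train.max_depth'."""
    value = params
    for part in dotted_key.split("."):
        value = value[part]
    return value


def invalidated_stages(stage_params, old, new):
    """Return the stages that depend on at least one parameter whose value changed."""
    return sorted(
        stage
        for stage, keys in stage_params.items()
        if any(get_param(old, key) != get_param(new, key) for key in keys)
    )


# Hypothetical parameter dependencies, as they might be declared per stage.
stage_params = {
    "prepare": ["prepare.test_fraction"],
    "train": ["train.max_depth", "train.seed"],
}
old = {"prepare": {"test_fraction": 0.2}, "train": {"max_depth": 5, "seed": 42}}
new = {"prepare": {"test_fraction": 0.2}, "train": {"max_depth": 8, "seed": 42}}

print(invalidated_stages(stage_params, old, new))
# ['train']
```

Declaring only the parameters a stage actually uses keeps reruns narrow: changing train.max_depth here leaves prepare untouched.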

Run one or more pipelines with varying parameters: dvc exp run

dvc exp run runs or resumes a DVC experiment based on a DVC pipeline. DVC experiment tracking allows tracking of results from multiple runs of the pipeline with varying parameter values (as defined in params.yaml) without requiring each run to be associated with its own git commit [4]. It also runs the experiment using an isolated copy of the workspace, so that edits you make while the experiment is running will not impact results.

Caution

The experiment tracking feature in DVC is dangerous/confusing to use with a dirty git repository. DVC creates a copy of the experiment’s workspace in .dvc/tmp/exps/ and runs it there. Git-ignored files/dirs are excluded from queued/temp runs to avoid committing unwanted files into Git (e.g. once successful experiments are persisted). Under some circumstances, unstaged, git-tracked files are automatically staged and included in the isolated workspace. The expected behavior isn’t 100% clear in the documentation, so I just avoid using dvc exp run in a dirty repo.

Tip

Rule of thumb: If you want to run the pipeline to test whether uncommitted changes are correct and ready to be committed, use dvc repro. dvc exp run is best used with a clean repository where you want to “experiment” with results from parameter changes, not code changes.

Visit the dvc documentation and their helpful hands-on tutorial to learn about the experiment-tracking features offered by DVC.

Record changes to code and data: dvc commit

dvc commit records changes to files or directories tracked by DVC. It stores the current contents of files and directories tracked by DVC in the cache, and updates dvc.lock and .dvc metadata files as needed. This forces DVC to accept any changed contents of tracked data currently in the workspace. [5]

Manage remote storage endpoints: dvc remote

dvc remote provides a set of commands to set up and manage remote storage: add, default, list, modify, remove, and rename.

Write or get data from remote storage: dvc push|pull

dvc push uploads tracked files or directories to remote storage based on the current dvc.yaml and .dvc files.

dvc pull downloads tracked files or directories from remote storage based on the current dvc.yaml and .dvc files, and makes them visible in the workspace.

Manually track externally-created files: dvc add

dvc add tells DVC to track versions of data that is not created by the DVC pipeline in dvc.yaml. DVC allows tracking of such datasets using .dvc files as lightweight pointers to your data in the cache. The dvc add command is used to track and update your data by creating or updating .dvc files, similar to the usage of git add to add source code updates to git.

Tip

If a file is generated as a stage output of dvc.yaml, you do not need to run dvc add to track changes. dvc repro does this for you.
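The "lightweight pointer" idea can be illustrated with a content hash: a few dozen bytes of metadata are enough to identify an arbitrarily large file, so only the metadata needs to live in git. The sketch below shows the concept only. The field names and the pointer_record helper are assumptions for illustration, and the exact contents of a real .dvc file depend on the DVC version.

```python
import hashlib
import tempfile
from pathlib import Path


def pointer_record(path):
    """Summarize a file as a small record: content hash, size in bytes, and file name."""
    data = Path(path).read_bytes()  # fine for a sketch; large files would be read in chunks
    return {
        "md5": hashlib.md5(data).hexdigest(),
        "size": len(data),
        "path": Path(path).name,
    }


# Use a throwaway file so the example is self-contained.
with tempfile.TemporaryDirectory() as tmp:
    data_file = Path(tmp) / "raw.csv"
    data_file.write_bytes(b"hello")
    record = pointer_record(data_file)

print(record)
# {'md5': '5d41402abc4b2a76b9719d911017c592', 'size': 5, 'path': 'raw.csv'}
```

Any change to the file's content changes the hash, so committing the updated record alongside the code is what ties a specific data version to a specific code version.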

Review results metrics and their changes: dvc metrics show|diff

dvc metrics provides a set of commands to display and compare metrics: show and diff.

In order to follow the performance of machine learning experiments, DVC has the ability to mark stage outputs or other files as metrics. These metrics are project-specific floating-point or integer values e.g. AUC, ROC, false positives, etc.

In pipelines, metrics files are typically generated by user data processing code, and are tracked using the -m (--metrics) and -M (--metrics-no-cache) options of dvc stage add.

Compare results of two different pipeline runs or experiments

It’s a good idea to use git tags to identify project revisions that you will want to share with others and/or reference in discussions.

$ git tag -a my-great-experiment [revision]

creates an annotated tag at the given revision. If revision is left out, HEAD is used.

You can compare pipeline metrics across any two git revisions with

$ dvc metrics diff [rev1] [rev2]

where rev1 and rev2 are any git commit hash, tag, or branch name.
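Conceptually, the comparison boils down to loading the metrics recorded at each revision and reporting the change per metric. The sketch below mimics that with two plain dictionaries. The metric names and values are made up for illustration, and metrics_diff is a hypothetical helper, not part of DVC.

```python
def metrics_diff(old, new):
    """Return {metric: (old_value, new_value, change)} for metrics present in both."""
    return {
        name: (old[name], new[name], round(new[name] - old[name], 6))
        for name in sorted(old.keys() & new.keys())
    }


# Hypothetical metrics recorded at two tagged revisions.
baseline = {"auc": 0.81, "false_positives": 40}
candidate = {"auc": 0.84, "false_positives": 31}

for name, (before, after, change) in metrics_diff(baseline, candidate).items():
    print(f"{name}: {before} -> {after} ({change:+})")
# auc: 0.81 -> 0.84 (+0.03)
# false_positives: 40 -> 31 (-9)
```

The rounding is only there to hide floating-point noise in the printed change.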

DVC internal directories and files

You shouldn’t need to muck around with DVC’s internals to use the tool successfully, but having a high-level understanding of how the tool works can increase your confidence and help you solve problems that inevitably arise. See the DVC documentation for more detail.

DVC creates a hidden directory in your project at .dvc/ relative to your project root folder, which contains the directories and files needed for DVC operation. The cache structure is similar to the structure of a .git/ cache folder in a git repository, if that’s something you’re familiar with.

.dvc/config: This is the default DVC configuration file. It can be edited by hand or with the dvc config command.

.dvc/config.local: This is an optional Git-ignored configuration file that will overwrite options in .dvc/config. This is useful when you need to specify sensitive values (secrets) which should not reach the Git repo (credentials, private locations, etc). This config file can also be edited by hand or with dvc config --local.

.dvc/cache: Default location of the cache directory. By default, the data files and directories in the workspace will only contain links to the data files in the cache. See dvc config cache for related configuration options, including changing its location.

Important

Note that DVC includes the cache directory in .gitignore during initialization. No data tracked by DVC should ever be pushed to the Git repository, only the DVC files (*.dvc or dvc.lock) that are needed to locate or reproduce that data.

.dvc/cache/runs: Default location of the run cache.

.dvc/plots: Directory for plot templates.

.dvc/tmp: Directory for miscellaneous temporary files.

.dvc/tmp/updater: This file is used to store the latest available version of DVC. It’s used to remind the user to upgrade when the installed version is behind.

.dvc/tmp/updater.lock: Lock file for .dvc/tmp/updater.

.dvc/tmp/lock: Lock file for the entire DVC project.

.dvc/tmp/rwlock: JSON file that contains read and write locks for specific dependencies and outputs, to allow safely running multiple DVC commands in parallel.

.dvc/tmp/exps: This directory will contain workspace copies used for temporary or queued experiments.

DVC pre-commit hooks

make initialize installs several DVC pre-commit hooks to simplify your DVC+git workflow.

The post-checkout hook executes dvc checkout after git checkout to automatically update the workspace with the correct data file versions.

The pre-commit hook executes dvc status before git commit to inform the user about the differences between cache and workspace.

The pre-push hook executes dvc push before git push to upload files and directories tracked by DVC to the dvc remote default.

Poetry How To…

Add software dependencies to the project

The poetry add command adds required packages to your pyproject.toml and installs them. This updates pyproject.toml and poetry.lock, which should both be committed to the git repository.

If you do not specify a version constraint, poetry will choose a suitable one based on the available package versions.

$ poetry add requests pendulum

# Allow >=2.0.5, <3.0.0 versions

poetry add pendulum@^2.0.5

# Allow >=2.0.5, <2.1.0 versions

poetry add pendulum@~2.0.5

# Allow >=2.0.5 versions, without upper bound

poetry add "pendulum>=2.0.5"

# Allow only 2.0.5 version

poetry add pendulum==2.0.5
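The caret and tilde shorthands are easy to mix up, so here is a small sketch that expands them into explicit bounds, following the ranges shown in the comments above. It is an illustration only, not Poetry's own resolver: expand_constraint is a hypothetical helper and it assumes a full three-part version number.

```python
def expand_constraint(constraint):
    """Expand '^X.Y.Z' or '~X.Y.Z' into (inclusive lower bound, exclusive upper bound)."""
    operator, version = constraint[0], constraint[1:]
    major, minor, patch = (int(part) for part in version.split("."))
    if operator == "~":
        # Tilde: allow patch-level changes only.
        upper = (major, minor + 1, 0)
    elif operator == "^":
        # Caret: allow changes that keep the left-most non-zero component fixed.
        if major > 0:
            upper = (major + 1, 0, 0)
        elif minor > 0:
            upper = (0, minor + 1, 0)
        else:
            upper = (0, 0, patch + 1)
    else:
        raise ValueError(f"unsupported constraint: {constraint!r}")
    return version, ".".join(str(number) for number in upper)


print(expand_constraint("^2.0.5"))  # ('2.0.5', '3.0.0')
print(expand_constraint("~2.0.5"))  # ('2.0.5', '2.1.0')
```

Whichever constraint goes into pyproject.toml, the exact resolved version is what gets written to poetry.lock, and that is what makes the installed environment repeatable.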

Documentation How To…

Build the docs

Just run

$ make docs

from the project root and the documentation website will be generated in docs/_build/html.

Use advanced markup supported by MyST Parser

This project uses Sphinx combined with the MyST Parser plugin to enable you to write documentation in markdown, but retain many of the powerful features of the reStructuredText (rst) format.

Check out the MyST plugin documentation for an overview of everything that’s possible.

Admonitions

You can create admonitions

:::{tip}

Let's give readers a helpful hint!

:::

produces

Tip

Let’s give readers a helpful hint!

Equations

You can add equations in LaTeX

:::{math}

:label: mymath

(a + b)^2 &=& a^2 + 2ab + b^2 \\

&=& (a + b)(a + b)

:::

produces

You can reference them too!

Equation {eq}`mymath` is a quadratic equation.

produces

Equation (1) is a quadratic equation.

Footnotes

You can also make footnotes

Look at this footnote [^example-footnote]

[^example-footnote]: This is a footnote.

produces

Look at this footnote [6]

Serve the documentation website

To build and share the documentation website:

make docs builds the project documentation to docs/_build/html.

make start-doc-server runs an http server for the documentation website.

make stop-doc-server stops a running http server.